Recognize Faces in Live Streaming Video using Amazon Kinesis & Rekognition

Amazon Rekognition is an AWS service for image & video analysis. It can identify objects, people, text, scenes, & activities. It also provides facial analysis, face comparison, & face search capabilities. You can detect, analyze, & compare faces for a wide variety of use cases, including user verification, cataloging, people counting, & public safety. We will build one such system in this article.

Use Cases

As you can imagine, the ability to detect faces in realtime can be put to use in many places: from intruder alert systems to allowing employee access into office buildings, or tracking employee or student attendance in offices & schools. Most of us already use face recognition to unlock our phones. For more such use cases, check out FaceFirst’s list of 21 Amazing Uses for Face Recognition.

What are we building?

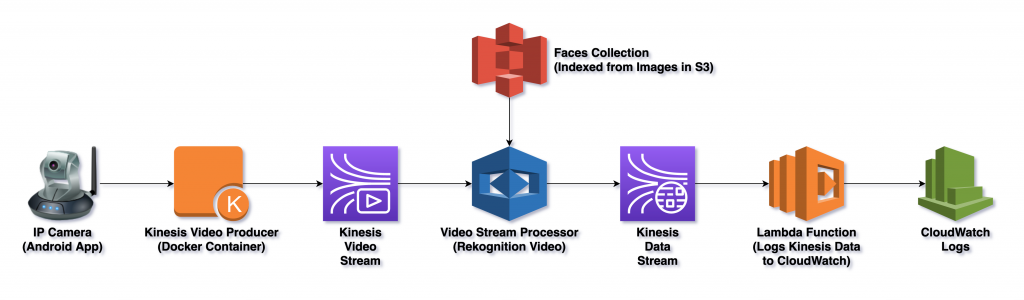

What we want is to build a system that can ingest live streaming video, analyze it in realtime, detect faces & inform us of it. We will use Amazon Kinesis Video Streams to ingest the live streaming video & Amazon Rekognition Video to detect faces. The architecture is as follows:

Let us walk through the entire flow once (from left to right above), before we start building individual components. The system works as follows:

- An IP camera app installed on your phone captures live video & serves it on your local WiFi network.

- The Kinesis video stream producer app, running in a Docker container on your computer, pulls the video stream from the IP camera app via an RTSP URL & pushes it to a Kinesis video stream in AWS.

- A Rekognition video stream processor pulls video from the Kinesis video stream, looks for faces in it (already indexed in a Rekognition faces collection) & publishes its findings to a Kinesis data stream.

- A Lambda function fetches the results from the Kinesis data stream & logs them to CloudWatch.

Before You Begin

There are several key aspects of this system you should know before you start building it:

- The Rekognition video streaming API is only available in 5 regions: Virginia, Oregon, Tokyo, Frankfurt & Ireland.

- Use CloudPing.co to find the closest supported region to you. I’m near Mumbai so I chose Frankfurt. You’ll see eu-central-1 as the chosen region throughout this article.

- Side note: All AWS CLI command outputs shown in this article are in YAML, instead of the default JSON.

- At the time of writing, CloudFormation did not support Rekognition & Kinesis video streams, so this article creates these resources by hand instead of automating them.

Now that you’re aware of the gotchas, let’s start building!

Faces Collection

We’ll start by uploading images of people who should be recognized in the video. For testing, you can upload a single image of yours in a bucket. Then create a Rekognition collection:

    aws rekognition create-collection \
      --collection-id my-rekognition-collection

    CollectionArn: aws:rekognition:eu-central-1:123456789012:collection/my-rekognition-collection
    FaceModelVersion: '5.0'
    StatusCode: 200

Then, index the image you uploaded into this collection:

    aws rekognition index-faces \
      --collection-id my-rekognition-collection \
      --image '{"S3Object":{"Bucket":"my-rekognition-faces-bucket","Name":"HarishKM.jpg"}}'

Rekognition will return a lot of details about the face:

    FaceModelVersion: '5.0'
    FaceRecords:
    - Face:
        BoundingBox:
          Height: 0.7871654033660889
          Left: 0.2684895694255829
          Top: 0.21874068677425385
          Width: 0.5820778012275696
        Confidence: 99.99998474121094
        FaceId: bade4904-120b-4f88-8b91-9e220609fa13
        ImageId: e59b074a-6e91-3607-9129-ce9e06d5ecd7
      FaceDetail:
        BoundingBox:
          Height: 0.7871654033660889
          Left: 0.2684895694255829
          Top: 0.21874068677425385
          Width: 0.5820778012275696
        Confidence: 99.99998474121094
        Landmarks:
        - Type: eyeLeft
          X: 0.3342727720737457
          Y: 0.5150454640388489
        - Type: eyeRight
          X: 0.596184253692627
          Y: 0.48627355694770813
        - Type: mouthLeft
          X: 0.3805276155471802
          Y: 0.7633035182952881
        - Type: mouthRight
          X: 0.5991687178611755
          Y: 0.7384595274925232
        - Type: nose
          X: 0.489641398191452
          Y: 0.6344687938690186
        Pose:
          Pitch: 7.072747230529785
          Roll: -5.585822105407715
          Yaw: 2.5462570190429688
        Quality:
          Brightness: 76.02369689941406
          Sharpness: 78.64350128173828
    UnindexedFaces: []

Kinesis Streams & IAM Role

Next, create a Kinesis video stream & a Kinesis data stream with their default configuration. Note down their ARNs. We’ll need them next.
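The two ARNs look similar & are easy to mix up later. As a small sanity check, here is a minimal sketch that splits a stream ARN into its parts. The helper name parseStreamArn is hypothetical (it is not part of any AWS SDK), & the pattern assumes the standard aws partition & the ARN shapes shown in this article:

```javascript
// Hypothetical helper: split a Kinesis stream ARN into its parts.
// Assumed shape: arn:aws:<service>:<region>:<account>:stream/<name>[/<creation-time>]
// Only the standard "aws" partition is handled in this sketch.
function parseStreamArn(arn) {
  const pattern =
    /^arn:aws:(kinesis|kinesisvideo):([a-z0-9-]+):(\d{12}):stream\/([^/]+)(?:\/(\d+))?$/;
  const match = pattern.exec(arn);
  if (!match) {
    throw new Error(`Not a Kinesis stream ARN: ${arn}`);
  }
  const [, service, region, accountId, streamName, creationTime] = match;
  return {
    service,       // "kinesisvideo" for video streams, "kinesis" for data streams
    region,
    accountId,
    streamName,
    // Video stream ARNs end with a creation timestamp; data stream ARNs do not
    creationTime: creationTime ?? null,
  };
}
```

Checking that service is kinesisvideo for the input ARN & kinesis for the output ARN before creating the stream processor can save a confusing permission error later.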

Since Rekognition would be reading from Kinesis video & writing to Kinesis data, it needs permissions to do so. So head on over to the IAM console & create an IAM role. Select Rekognition as the service that will be assuming this role. Finish creating it with the defaults. Now detach the IAM policy attached to it & attach a new inline IAM policy as shown below:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "kinesis:PutRecord",
            "kinesis:PutRecords"
          ],
          "Resource": "arn:aws:kinesis:eu-central-1:123456789012:stream/my-kinesis-data-stream"
        },
        {
          "Effect": "Allow",
          "Action": [
            "kinesisvideo:GetDataEndpoint",
            "kinesisvideo:GetMedia"
          ],
          "Resource": "arn:aws:kinesisvideo:eu-central-1:123456789012:stream/my-kinesis-video-stream/1616144781359"
        }
      ]
    }

Lambda Function

Create a Node.js 14 Lambda function with this code:

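    exports.handler = async event =>
      event.Records.forEach(record =>
        // Kinesis delivers each record's payload base64-encoded.
        // Buffer.from replaces the deprecated new Buffer() constructor.
        console.log(Buffer.from(record.kinesis.data, 'base64').toString('utf8')))

Add the Kinesis data stream as a trigger to this Lambda function.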

Rekognition Video Stream Processor

Now let’s create the stream processor. This is the heart of the system. It pulls video from Kinesis video, analyzes it & pushes results to Kinesis data:

    aws rekognition create-stream-processor \
      --name my-rekognition-video-stream-processor \
      --role-arn arn:aws:iam::123456789012:role/my-rekognition-role \
      --settings '{"FaceSearch":{"CollectionId":"my-rekognition-collection"}}' \
      --input '{"KinesisVideoStream":{"Arn":"arn:aws:kinesisvideo:eu-central-1:123456789012:stream/my-kinesis-video-stream/1616144781359"}}' \
      --stream-processor-output '{"KinesisDataStream":{"Arn":"arn:aws:kinesis:eu-central-1:123456789012:stream/my-kinesis-data-stream"}}'

    StreamProcessorArn: arn:aws:rekognition:eu-central-1:123456789012:streamprocessor/my-rekognition-video-stream-processor

Next, start the stream processor:

    aws rekognition start-stream-processor \
      --name my-rekognition-video-stream-processor

This does not return any output, so check the stream processor’s status by running aws rekognition list-stream-processors. It should be running. If not, it’s most likely a permission issue with the IAM role you created earlier.

    StreamProcessors:
    - Name: my-rekognition-video-stream-processor
      Status: RUNNING

IP Camera

We’re almost ready to stream but before that, install IP Webcam from the Google Play store. Once done, open it & make the following settings:

- Set Main camera to Front camera in Video preferences.

- Set Video resolution to 640x480 in Video preferences.

- Set Audio mode to Disabled.

- Tap Start server.

Note the IP address of your phone displayed on screen. Your phone & computer should be connected to the same WiFi network.

Start Streaming!

This is where the magic happens!

Instead of building a Kinesis video producer from scratch, you can use a Docker container I’ve built & published with the producer ready to go. First, pull it:

    docker pull kmharish/kvs-producer

It’s a HUGE image (3 GB)! So give it a while to download.

Once that’s done, run it like so:

    docker run \
      --network host \
      -e AWS_DEFAULT_REGION=eu-central-1 \
      -it kmharish/kvs-producer \
      <aws-access-key-id> \
      <aws-secret-access-key> \
      rtsp://<android-ip>:8080/h264_pcm.sdp \
      my-kinesis-video-stream

Leave it running & open your video stream in the Kinesis console. Open Media playback to see the video streaming live from your phone to AWS!

Who’s on Camera?

As soon as you see your video in the Kinesis console, the Rekognition stream processor has started its analysis behind the scenes. Within minutes, it should have pushed quite a bit of data to the Kinesis data stream. Open the Lambda function’s logs in CloudWatch. It should have logged something like this:

    {
      "InputInformation": {
        "KinesisVideo": {
          "StreamArn": "arn:aws:kinesisvideo:eu-central-1:123456789012:stream/my-kinesis-video-stream/1616144781359",
          "FragmentNumber": "91343852333289682796718532614445757584843717598",
          "ServerTimestamp": 1510552593.455,
          "ProducerTimestamp": 1510552593.193,
          "FrameOffsetInSeconds": 2
        }
      },
      "StreamProcessorInformation": {
        "Status": "RUNNING"
      },
      "FaceSearchResponse": [
        {
          "DetectedFace": {
            "BoundingBox": {
              "Height": 0.075,
              "Width": 0.05625,
              "Left": 0.428125,
              "Top": 0.40833333
            },
            "Confidence": 99.975174,
            "Landmarks": [
              {
                "X": 0.4452057,
                "Y": 0.4395594,
                "Type": "eyeLeft"
              },
              {
                "X": 0.46340984,
                "Y": 0.43744427,
                "Type": "eyeRight"
              },
              {
                "X": 0.45960626,
                "Y": 0.4526856,
                "Type": "nose"
              },
              {
                "X": 0.44958648,
                "Y": 0.4696949,
                "Type": "mouthLeft"
              },
              {
                "X": 0.46409217,
                "Y": 0.46704912,
                "Type": "mouthRight"
              }
            ],
            "Pose": {
              "Pitch": 2.9691637,
              "Roll": -6.8904796,
              "Yaw": 23.84388
            },
            "Quality": {
              "Brightness": 40.592964,
              "Sharpness": 96.09616
            }
          },
          "MatchedFaces": [
            {
              "Similarity": 88.863960,
              "Face": {
                "BoundingBox": {
                  "Height": 0.557692,
                  "Width": 0.749838,
                  "Left": 0.103426,
                  "Top": 0.206731
                },
                "FaceId": "ed1b560f-d6af-5158-989a-ff586c931545",
                "Confidence": 99.999201,
                "ImageId": "70e09693-2114-57e1-807c-50b6d61fa4dc",
                "ExternalImageId": "HarishKM.jpg"
              }
            }
          ]
        }
      ]
    }

The ExternalImageId field near the end shows the image that matched the face! Rekognition includes ExternalImageId only for faces that were indexed with the --external-image-id option of index-faces.
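Reading this JSON by eye gets tedious quickly. As a rough sketch, matches could be pulled out of one parsed record like this. The function name findMatches & the default threshold of 80 are assumptions made for illustration; the field names are taken from the sample output above:

```javascript
// Hypothetical helper: list matched faces in one parsed stream processor record.
// minSimilarity (default 80) is an illustrative cut-off, not a service default.
function findMatches(result, minSimilarity = 80) {
  const matches = [];
  for (const response of result.FaceSearchResponse || []) {
    for (const match of response.MatchedFaces || []) {
      if (match.Similarity >= minSimilarity) {
        matches.push({
          faceId: match.Face.FaceId,
          // Absent when the face was indexed without an external image ID
          externalImageId: match.Face.ExternalImageId ?? null,
          similarity: match.Similarity,
        });
      }
    }
  }
  // Highest similarity first
  return matches.sort((a, b) => b.similarity - a.similarity);
}
```

For the sample record above, this would yield a single entry for HarishKM.jpg, since its similarity of about 88.86 clears the assumed threshold.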

Cleanup

After you’ve played with it to your heart’s content, it’s time to destroy it all! Follow these steps:

- Stop the Docker container (the Kinesis producer) & close the IP camera app.

- Stop & delete the stream processor:

    aws rekognition stop-stream-processor --name my-rekognition-video-stream-processor
    aws rekognition delete-stream-processor --name my-rekognition-video-stream-processor

- Delete the Lambda function & the Kinesis video & data streams.

- Delete the Rekognition faces collection:

    aws rekognition delete-collection --collection-id my-rekognition-collection

- Delete the S3 bucket.

References

This was not an easy project! I’m very grateful to the people who tried something like this before me & wrote about it online. I referred to the following resources to build this system:

- Amazon Rekognition developer guide: Working with streaming videos

- AWS samples on GitHub: Amazon Rekognition video analyzer

- Medium article by ZenOf.AI: Real Time Face Identification on Live Camera Feed using Amazon Rekognition Video and Kinesis Video Streams

- Medium article by Matt Collins: Facial Recognition with a Raspberry Pi and Kinesis Video Streams

About the Author ✍🏻

Harish KM is a Principal DevOps Engineer at QloudX. 👨🏻💻

With over a decade of industry experience as everything from a full-stack engineer to a cloud architect, Harish has built many world-class solutions for clients around the world! 👷🏻♂️

With over 20 certifications in cloud (AWS, Azure, GCP), containers (Kubernetes, Docker) & DevOps (Terraform, Ansible, Jenkins), Harish is an expert in a multitude of technologies. 📚

These days, his focus is on the fascinating world of DevOps & how it can transform the way we do things! 🚀

While running the Docker container, I’m facing this error. Please help.

[INFO ][2021-06-06 08:22:17] Using region: us-west-2

[INFO ][2021-06-06 08:22:17] Using aws credentials for Kinesis Video Streams

[INFO ][2021-06-06 08:22:17] No session token was detected.

[INFO ][2021-06-06 08:22:17] createKinesisVideoClient(): Creating Kinesis Video Client

[INFO ][2021-06-06 08:22:17] heapInitialize(): Initializing native heap with limit size 134217728, spill ratio 0% and flags 0x00000001

[INFO ][2021-06-06 08:22:17] heapInitialize(): Creating AIV heap.

[INFO ][2021-06-06 08:22:17] heapInitialize(): Heap is initialized OK

[DEBUG][2021-06-06 08:22:17] stepStateMachine(): PIC Client State Machine – Current state: 0x1, Next state: 0x2

[DEBUG][2021-06-06 08:22:17] getSecurityTokenHandler invoked

[DEBUG][2021-06-06 08:22:17] Refreshing credentials. Force refreshing: 0 Now time is: 1622967737797275672 Expiration: 0

[INFO ][2021-06-06 08:22:17] New credentials expiration is 1622970137

[DEBUG][2021-06-06 08:22:17] stepStateMachine(): PIC Client State Machine – Current state: 0x2, Next state: 0x10

[INFO ][2021-06-06 08:22:17] createDeviceResultEvent(): Create device result event.

[DEBUG][2021-06-06 08:22:17] stepStateMachine(): PIC Client State Machine – Current state: 0x10, Next state: 0x40

[DEBUG][2021-06-06 08:22:17] clientReadyHandler invoked

[DEBUG][2021-06-06 08:22:17] Client is ready

[INFO ][2021-06-06 08:22:17] Creating Kinesis Video Stream IDS

[INFO ][2021-06-06 08:22:17] createKinesisVideoStream(): Creating Kinesis Video Stream.

[DEBUG][2021-06-06 08:22:17] stepStateMachine(): PIC Stream State Machine – Current state: 0x1, Next state: 0x2

[DEBUG][2021-06-06 08:22:17] stepStateMachine(): PIC Client State Machine – Current state: 0x40, Next state: 0x40

[INFO ][2021-06-06 08:22:20] writeHeaderCallback(): RequestId: 96b5441d-9b1b-4b2e-89e0-ebea3d983288

[WARN ][2021-06-06 08:22:20] curlCompleteSync(): HTTP Error 403 : Response: {"message":"The security token included in the request is invalid."}

Request URL: https://kinesisvideo.us-west-2.amazonaws.com/describeStream

Request Headers:

Authorization: AWS4-HMAC-SHA256 Credential=ASIAUP5QC2GV2GDKW2HE/20210606/us-west-2/kinesisvideo/aws4_request, SignedHeaders=content-type;host;user-agent;x-amz-date, Signature=df1eb460956914a7eaa3cccb505e9e5a5a53eac54e65681e14f0e174edc6e420

content-type: application/json

host: kinesisvideo.us

[DEBUG][2021-06-06 08:22:20] describeStreamCurlHandler(): DescribeStream API response: {"message":"The security token included in the request is invalid."}

[INFO ][2021-06-06 08:22:20] describeStreamResultEvent(): Describe stream result event.

[DEBUG][2021-06-06 08:22:20] defaultStreamErrorReportCallback(): Reported streamError callback for stream handle 94401511077001. Upload handle 18446744073709551615. Fragment timecode in 100ns: 0. Error status: 0x52000010

[DEBUG][2021-06-06 08:22:20] streamErrorHandler invoked

[ERROR][2021-06-06 08:22:20] Reporting stream error. Errored timecode: 0 Status: 1375731728

[WARN ][2021-06-06 08:22:20] continuousRetryStreamErrorReportHandler(): Reporting stream error. Errored timecode: 0 Status: 0x52000010

[ERROR][2021-06-06 08:22:47] createKinesisVideoStreamSync(): Failed to create Kinesis Video Stream – timed out.

[INFO ][2021-06-06 08:22:47] freeKinesisVideoStream(): Freeing Kinesis Video stream.

[DEBUG][2021-06-06 08:22:48] defaultStreamShutdownCallback(): Reported streamShutdown callback for stream handle 94401511077001

[ERROR][2021-06-06 08:22:48] Unable to create Kinesis Video stream. IDS Error status: 0xf

[INFO ][2021-06-06 08:22:48] Freeing Kinesis Video Stream IDS

[INFO ][2021-06-06 08:22:48] freeKinesisVideoStream(): Freeing Kinesis Video stream.

[ERROR][2021-06-06 08:22:48] Failed to initialize kinesis video.

[INFO ][2021-06-06 08:22:48] freeKinesisVideoClient(): Freeing Kinesis Video Client

This Docker image is quite old. Try creating & using a new Docker image with the latest version of Kinesis Video Stream Producer: https://docs.aws.amazon.com/kinesisvideostreams/latest/dg/producer-sdk.html