Reduce Inter-AZ Data Transfer Cost in Amazon EKS

Table of Contents

- Introduction

- Where Exactly Does Network Traffic Cross Zones?

- ALB Cross-Zone Load Balancing

- Routing Traffic in Ingress Controller

- Topology Aware Routing

- References

- About the Author

Introduction

Operating Amazon’s Elastic Kubernetes Service (EKS) at scale comes with its own challenges. Having done this for several years now, we here at QloudX have encountered (and overcome) many such issues! One such challenge is keeping the inter-AZ data transfer cost down. AWS charges for network traffic flowing between EKS nodes (EC2 instances) located in different availability zones.

In our case, with an EKS cluster running 1000+ pods, many of which are microservices communicating with each other, inter-AZ data transfer cost made up 30-40% of our EKS bill at one point. Running your EKS cluster in a single zone, at least in non-production environments, completely eliminates this problem. If that’s not feasible for you, this article describes a few ways to keep inter-AZ cost down in a multi-AZ EKS cluster.

Where Exactly Does Network Traffic Cross Zones?

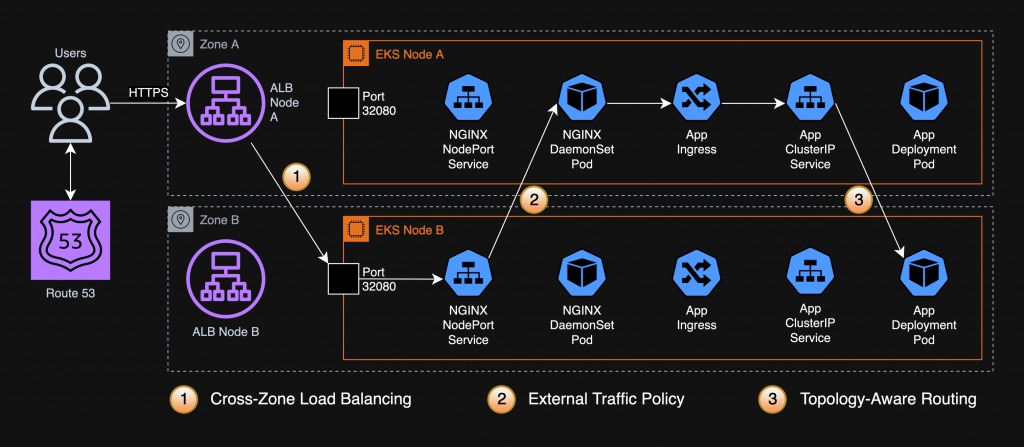

Consider the diagram below showing the flow of a network request from a user’s browser to an EKS pod. Network traffic can cross zones at points 1, 2 & 3. This is one possible architecture of a typical EKS deployment. Even if you don’t use the same components or config in your EKS, the solutions proposed here to reduce inter-AZ traffic might still work for you.

The rest of this article describes the proposed solutions in detail.

ALB Cross-Zone Load Balancing

The nodes for your load balancer distribute requests from clients to registered targets.

AWS Documentation > How Elastic Load Balancing Works > Cross-Zone Load Balancing

- When cross-zone load balancing is enabled, each load balancer node distributes traffic across the registered targets in all enabled Availability Zones.

- When cross-zone load balancing is disabled, each load balancer node distributes traffic only across the registered targets in its Availability Zone.

If you have an Application Load Balancer (ALB) fronting your EKS cluster & can guarantee the availability of at least 1 replica of your app in each AZ, turn off cross-zone load balancing on the ALB to reduce some inter-AZ data transfer.
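As a rough sketch of how this could be configured: on an ALB, cross-zone load balancing is controlled per target group through the load_balancing.cross_zone.enabled attribute. The manifest below assumes the ALB is managed by the AWS Load Balancer Controller via an Ingress, which this article does not state. The Ingress name, namespace and backend service name are hypothetical placeholders.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-alb                 # hypothetical name
  namespace: ingress-nginx        # hypothetical namespace
  annotations:
    # Register EKS nodes (NodePort) as ALB targets
    alb.ingress.kubernetes.io/target-type: instance
    # Turn off cross-zone load balancing on the target group
    alb.ingress.kubernetes.io/target-group-attributes: load_balancing.cross_zone.enabled=false
spec:
  ingressClassName: alb
  defaultBackend:
    service:
      name: ingress-nginx-controller   # hypothetical NGINX NodePort service
      port:
        number: 80
```

If your ALB is provisioned some other way (console, Terraform, CloudFormation), the same target group attribute would be set there instead.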

Routing Traffic in Ingress Controller

We use NGINX as our ingress controller. It’s deployed as a DaemonSet with NodePort services. The following solution should work even if you use another ingress controller in a similar (NodePort service) configuration.

External Traffic Policy of NodePort Services

Kubernetes services have a property: spec.externalTrafficPolicy = Cluster or Local:

- When set to Cluster (default), services route external traffic to pods across the cluster

- When set to Local, services route external traffic only to pods on the node where the traffic originally arrived

Setting NGINX service’s external traffic policy to Local would prevent a network hop of incoming traffic, either to another node or another zone.
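For illustration, a NodePort service with the policy set to Local might look like the following. This is a minimal sketch: the service name, namespace, selector label and node port are hypothetical and will differ depending on how your ingress controller was installed.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller   # hypothetical name
  namespace: ingress-nginx         # hypothetical namespace
spec:
  type: NodePort
  # Default is Cluster; Local keeps traffic on the node where it arrived
  externalTrafficPolicy: Local
  selector:
    app.kubernetes.io/name: ingress-nginx   # hypothetical label
  ports:
    - name: http
      port: 80
      targetPort: 80
      nodePort: 30080   # hypothetical; must be within the cluster's NodePort range
```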

Caveat: Client IP of Incoming Traffic

When traffic reaches a pod, the source IP of that traffic from the pod’s perspective is:

- The “true” source IP if the traffic came via a service with traffic policy Local

- The IP of the EKS node where the traffic originally arrived (before it was re-routed to the pod’s node), if the traffic came via a service with traffic policy Cluster

Since EKS here is behind an ALB, no pod will ever see the “true” source IP. Every app must extract it from the X-Forwarded-For HTTP header.

Questions / Considerations

Q: Since this change only affects network traffic from NGINX services to NGINX pods, not from application services to application pods, how much reduction in inter-AZ traffic can you realistically expect?

A: Since ALL incoming traffic goes through NGINX, it’s worthwhile to test traffic policy Local on it. Formula to calculate traffic reduction: ( AZ Count – 1 ) x ( ELB Incoming Bytes / AZ Count )
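As a worked example of the formula above, assume (hypothetically) a cluster spread across 3 AZs that receives 900 GB through the load balancer in a month, with traffic evenly distributed across zones and across NGINX pods:

```latex
\[
\text{Reduction}
  = (N_{AZ} - 1) \times \frac{B_{ELB}}{N_{AZ}}
  = (3 - 1) \times \frac{900\ \text{GB}}{3}
  = 600\ \text{GB}
\]
```

Here N_AZ is the AZ count and B_ELB is the ELB incoming bytes. Under these assumptions, roughly two thirds of incoming bytes would otherwise hop to an NGINX pod in another zone. Treat the result as an estimate, since real traffic is rarely spread perfectly evenly.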

Considering the effect traffic policy has on client source IP:

Q: How would the traffic policy change affect NGINX itself?

A: No effect.

Q: How would the traffic policy change affect apps that receive traffic from NGINX?

A: No effect to downstream apps.

Q: Some of our app ingresses carry NGINX-specific annotations. Which annotations might break when changing traffic policy?

A: Generally speaking, traffic policy shouldn’t affect any NGINX behavior (annotation). Set traffic policy to Local in non-prod & monitor for potential issues.

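Q: Why isn’t external traffic policy Local available for ClusterIP services?

A: External traffic policy only applies to services that receive traffic from outside the cluster (NodePort & LoadBalancer). ClusterIP services have an equivalent property, spec.internalTrafficPolicy, but it should not be used unless you can guarantee the presence of an app pod on the current node.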
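The internal traffic policy mentioned in this answer is set with spec.internalTrafficPolicy. A minimal sketch, assuming a hypothetical workload named node-local-agent that runs on every node (for example as a DaemonSet), could look like this:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: node-local-agent        # hypothetical name
spec:
  type: ClusterIP
  # Only route in-cluster traffic to endpoints on the caller's own node.
  # Traffic is dropped if no ready pod exists on that node.
  internalTrafficPolicy: Local
  selector:
    app: node-local-agent       # hypothetical label
  ports:
    - port: 8080
      targetPort: 8080
```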

Q: Should you consider setting external traffic policy to Local for other NodePort services fronting DaemonSets?

A: No, external traffic policy only makes sense for NodePort services directly behind a load balancer, so only NGINX in this case.

Q: When the NGINX ingress controller is undergoing a rolling update (DaemonSet pods on every node are being replaced), does traffic policy Local cause any dropped incoming traffic, for example while the pod on a node is being replaced?

A: Yes, traffic will drop (momentarily)!

Q: What if a service’s external traffic policy is set to Local but none of the service’s backing pods are on the current node?

A: In this case, the incoming traffic will be dropped! Even though Kubernetes sees that all pods that can handle the traffic are on other nodes, the service will NOT route incoming traffic to other pods on other nodes.

Q: Kubernetes services load balance incoming traffic. Do services fronting DaemonSets also load balance by default (send incoming traffic to pods on other nodes) even though DaemonSet guarantees that there will always be a pod on the current node?

A: Yes, by default (traffic policy = Cluster), services fronting DaemonSets will also load balance traffic & send traffic off-node if needed. Having a pod on the current node doesn’t mean that all traffic reaching that node will be routed to this pod, unless you set traffic policy to Local.

Topology Aware Routing

Topology Aware Routing provides a mechanism in Kubernetes to help keep network traffic within the zone where it originated. It’s enabled on a Kubernetes service by setting its service.kubernetes.io/topology-mode annotation to Auto.
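For a single service, the annotation could be applied like this. The service name, selector and ports are hypothetical and used only for illustration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: orders                  # hypothetical name
  annotations:
    # Ask Kubernetes to prefer endpoints in the caller's zone
    service.kubernetes.io/topology-mode: Auto
spec:
  selector:
    app: orders                 # hypothetical label
  ports:
    - port: 80
      targetPort: 8080
```

Note that Kubernetes treats this as a hint. It may fall back to cluster-wide routing, for example when a service’s endpoints are unevenly distributed across zones.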

Since TAR cannot be enabled globally at a cluster-level, we need a way to annotate every service with the topology-mode annotation. Enter Kyverno! Kyverno is a policy engine for Kubernetes. A mutating Kyverno policy like the following can ensure every service always has the annotation:

    apiVersion: kyverno.io/v1
    kind: ClusterPolicy
    metadata:
      name: topology-aware-services
    spec:
      mutateExistingOnPolicyUpdate: true
      rules:
        - name: topology-aware-services
          match:
            all:
              - resources:
                  kinds:
                    - Service
          mutate:
            targets:
              - apiVersion: v1
                kind: Service
            patchStrategicMerge:
              metadata:
                annotations:
                  +(service.kubernetes.io/topology-mode): auto

References

Monitoring and Optimizing EKS Inter-AZ Data Transfer Cost — AWS re:Post

External traffic policy — Kubernetes documentation

Internal traffic policy — Kubernetes documentation

Preserving client source IP — Kubernetes documentation

External Traffic Policies and Health Checks — DigitalOcean documentation

Topology-Aware Routing — AWS Blog

Topology-Aware Routing in EKS — AWS re:Post

Optimize AZ traffic costs using Karpenter & Istio — AWS Blog

About the Author

Harish KM is a Principal DevOps Engineer at QloudX.

With over a decade of industry experience as everything from a full-stack engineer to a cloud architect, Harish has built many world-class solutions for clients around the world!

With over 20 certifications in cloud (AWS, Azure, GCP), containers (Kubernetes, Docker) & DevOps (Terraform, Ansible, Jenkins), Harish is an expert in a multitude of technologies.

These days, his focus is on the fascinating world of DevOps & how it can transform the way we do things!